Running Hadoop on Ubuntu Linux (Single-Node Cluster)

In this tutorial I will describe the required steps for setting up a pseudo-distributed, single-node Hadoop cluster backed by the Hadoop Distributed File System, running on Ubuntu Linux.

- Prerequisites

- Sun Java 6

- Adding a dedicated Hadoop system user

- Configuring SSH

- Disabling IPv6

- Alternative

- Hadoop

- Installation

- Update $HOME/.bashrc

- Excursus: Hadoop Distributed File System (HDFS)

- Configuration

- hadoop-env.sh

- conf/*-site.xml

- Formatting the HDFS filesystem via the NameNode

- Starting your single-node cluster

- Stopping your single-node cluster

- Running a MapReduce job

- Download example input data

- Restart the Hadoop cluster

- Copy local example data to HDFS

- Run the MapReduce job

- Retrieve the job result from HDFS

- Hadoop Web Interfaces

- NameNode Web Interface (HDFS layer)

- JobTracker Web Interface (MapReduce layer)

- TaskTracker Web Interface (MapReduce layer)

- What's next?

- Related Links

- Change Log

Hadoop is a framework written in Java for running applications on large clusters of commodity hardware and incorporates features similar to those of the Google File System (GFS) and of the MapReduce computing paradigm. Hadoop's HDFS is a highly fault-tolerant distributed file system and, like Hadoop in general, designed to be deployed on low-cost hardware. It provides high throughput access to application data and is suitable for applications that have large data sets.

The main goal of this tutorial is to get a simple Hadoop installation up and running so that you can play around with the software and learn more about it.

This tutorial has been tested with the following software versions:

- Ubuntu Linux 10.04 LTS (deprecated: 8.10 LTS, 8.04, 7.10, 7.04)

- Hadoop 1.0.3, released May 2012

Figure 1: Cluster of machines running Hadoop at Yahoo! (Source: Yahoo!)

Prerequisites

Sun Java 6

Hadoop requires a working Java 1.5+ (aka Java 5) installation. However, using Java 1.6 (aka Java 6) is recommended for running Hadoop. For the sake of this tutorial, I will therefore describe the installation of Java 1.6.

Important Note: The apt instructions below are taken from this SuperUser.com thread. I got notified that the previous instructions that I provided no longer work. Please be aware that adding a third-party repository to your Ubuntu configuration is considered a security risk. If you do not want to proceed with the apt instructions below, feel free to install Sun JDK 6 via alternative means (e.g. by downloading the binary package from Oracle) and then continue with the next section in the tutorial.

    # Add Ferramosca Roberto's repository to your apt repositories
    # See https://launchpad.net/~ferramroberto/
    #
    $ sudo apt-get install python-software-properties
    $ sudo add-apt-repository ppa:ferramroberto/java

    # Update the source list
    $ sudo apt-get update

    # Install Sun Java 6 JDK
    $ sudo apt-get install sun-java6-jdk

    # Select Sun's Java as the default on your machine.
    # See 'sudo update-alternatives --config java' for more information.
    #
    $ sudo update-java-alternatives -s java-6-sun

The full JDK will be placed in /usr/lib/jvm/java-6-sun (well, this directory is actually a symlink on Ubuntu).

After installation, make a quick check whether Sun's JDK is correctly set up:

    user@ubuntu:~# java -version
    java version "1.6.0_20"
    Java(TM) SE Runtime Environment (build 1.6.0_20-b02)
    Java HotSpot(TM) Client VM (build 16.3-b01, mixed mode, sharing)

Adding a dedicated Hadoop system user

We will use a dedicated Hadoop user account for running Hadoop. While that's not required, it is recommended because it helps to separate the Hadoop installation from other software applications and user accounts running on the same machine (think: security, permissions, backups, etc).

    $ sudo addgroup hadoop
    $ sudo adduser --ingroup hadoop hduser

This will add the user hduser and the group hadoop to your local machine.

Configuring SSH

Hadoop requires SSH access to manage its nodes, i.e. remote machines plus your local machine if you want to use Hadoop on it (which is what we want to do in this short tutorial). For our single-node setup of Hadoop, we therefore need to configure SSH access to localhost for the hduser user we created in the previous section.

I assume that you have SSH up and running on your machine and configured it to allow SSH public key authentication. If not, there are several online guides available.

First, we have to generate an SSH key for the hduser user.

    user@ubuntu:~$ su - hduser
    hduser@ubuntu:~$ ssh-keygen -t rsa -P ""
    Generating public/private rsa key pair.
    Enter file in which to save the key (/home/hduser/.ssh/id_rsa):
    Created directory '/home/hduser/.ssh'.
    Your identification has been saved in /home/hduser/.ssh/id_rsa.
    Your public key has been saved in /home/hduser/.ssh/id_rsa.pub.
    The key fingerprint is:
    9b:82:ea:58:b4:e0:35:d7:ff:19:66:a6:ef:ae:0e:d2 hduser@ubuntu
    The key's randomart image is:
    [...snipp...]
    hduser@ubuntu:~$

The second line will create an RSA key pair with an empty password. Generally, using an empty password is not recommended, but in this case it is needed to unlock the key without your interaction (you don't want to enter the passphrase every time Hadoop interacts with its nodes).

Second, you have to enable SSH access to your local machine with this newly created key.

    hduser@ubuntu:~$ cat $HOME/.ssh/id_rsa.pub >> $HOME/.ssh/authorized_keys

The final step is to test the SSH setup by connecting to your local machine with the hduser user. The step is also needed to save your local machine's host key fingerprint to the hduser user's known_hosts file. If you have any special SSH configuration for your local machine like a non-standard SSH port, you can define host-specific SSH options in $HOME/.ssh/config (see man ssh_config for more information).

    hduser@ubuntu:~$ ssh localhost
    The authenticity of host 'localhost (::1)' can't be established.
    RSA key fingerprint is d7:87:25:47:ae:02:00:eb:1d:75:4f:bb:44:f9:36:26.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'localhost' (RSA) to the list of known hosts.
    Linux ubuntu 2.6.32-22-generic #33-Ubuntu SMP Wed Apr 28 13:27:30 UTC 2010 i686 GNU/Linux
    Ubuntu 10.04 LTS
    [...snipp...]
    hduser@ubuntu:~$

If the SSH connection should fail, these general tips might help:

- Enable debugging with ssh -vvv localhost and investigate the error in detail.

- Check the SSH server configuration in /etc/ssh/sshd_config, in particular the options PubkeyAuthentication (which should be set to yes) and AllowUsers (if this option is active, add the hduser user to it). If you made any changes to the SSH server configuration file, you can force a configuration reload with sudo /etc/init.d/ssh reload.
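One more thing worth ruling out is that the public key never made it into authorized_keys. The following is a minimal sketch of such a check; the function name key_is_authorized is hypothetical and not part of OpenSSH or Hadoop, and it only compares file contents, it does not contact the SSH server.

```shell
# Hypothetical helper (not part of OpenSSH or Hadoop): succeeds if the
# public key stored in file $1 appears as a complete line in the
# authorized_keys file $2.
key_is_authorized() {
    pub_file=$1
    auth_file=$2
    # Both files must exist and be readable.
    [ -r "$pub_file" ] && [ -r "$auth_file" ] || return 1
    # -F: fixed strings, -x: whole-line match, -q: quiet,
    # -f: take the pattern(s) from the public key file.
    grep -qxF -f "$pub_file" "$auth_file"
}

# Example call, run as hduser:
#   key_is_authorized "$HOME/.ssh/id_rsa.pub" "$HOME/.ssh/authorized_keys" \
#       || echo "public key is missing from authorized_keys"
```

If the check fails, re-running the cat command shown earlier appends the key again.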

Disabling IPv6

One problem with IPv6 on Ubuntu is that using 0.0.0.0 for the various networking-related Hadoop configuration options will result in Hadoop binding to the IPv6 addresses of my Ubuntu box. In my case, I realized that there's no practical point in enabling IPv6 on a box when you are not connected to any IPv6 network. Hence, I simply disabled IPv6 on my Ubuntu machine. Your mileage may vary.

To disable IPv6 on Ubuntu 10.04 LTS, open /etc/sysctl.conf in the editor of your choice and add the following lines to the end of the file:

    # /etc/sysctl.conf

    # disable ipv6
    net.ipv6.conf.all.disable_ipv6 = 1
    net.ipv6.conf.default.disable_ipv6 = 1
    net.ipv6.conf.lo.disable_ipv6 = 1

You have to reboot your machine in order to make the changes take effect.

You can check whether IPv6 is enabled on your machine with the following command:

    $ cat /proc/sys/net/ipv6/conf/all/disable_ipv6

A return value of 0 means IPv6 is enabled, a value of 1 means disabled (that's what we want).
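If you want to use this check in a script, the 0/1 value can be turned into a readable status. This is only a sketch; ipv6_status is a hypothetical name chosen for illustration.

```shell
# Hypothetical helper: translate the value read from
# /proc/sys/net/ipv6/conf/all/disable_ipv6 into a readable status.
ipv6_status() {
    case "$1" in
        0) echo "enabled" ;;   # disable_ipv6 = 0 means IPv6 is on
        1) echo "disabled" ;;  # disable_ipv6 = 1 means IPv6 is off
        *) echo "unknown" ;;   # anything else, e.g. an empty value
    esac
}

# Example call on a Linux machine:
#   ipv6_status "$(cat /proc/sys/net/ipv6/conf/all/disable_ipv6)"
```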

Alternative

You can also disable IPv6 only for Hadoop as documented in HADOOP-3437. You can do so by adding the following line to conf/hadoop-env.sh:

    # conf/hadoop-env.sh
    export HADOOP_OPTS=-Djava.net.preferIPv4Stack=true

Hadoop

Installation

Download Hadoop from the Apache Download Mirrors and extract the contents of the Hadoop package to a location of your choice. I picked /usr/local/hadoop. Make sure to change the owner of all the files to the hduser user and hadoop group, for example:

    $ cd /usr/local
    $ sudo tar xzf hadoop-1.0.3.tar.gz
    $ sudo mv hadoop-1.0.3 hadoop
    $ sudo chown -R hduser:hadoop hadoop

(Just to give you the idea, YMMV – personally, I create a symlink from hadoop-1.0.3 to hadoop.)

Update $HOME/.bashrc

Add the following lines to the end of the $HOME/.bashrc file of user hduser. If you use a shell other than bash, you should of course update its appropriate configuration files instead of .bashrc.

    # $HOME/.bashrc

    # Set Hadoop-related environment variables
    export HADOOP_HOME=/usr/local/hadoop

    # Set JAVA_HOME (we will also configure JAVA_HOME directly for Hadoop later on)
    export JAVA_HOME=/usr/lib/jvm/java-6-sun

    # Some convenient aliases and functions for running Hadoop-related commands
    unalias fs &> /dev/null
    alias fs="hadoop fs"
    unalias hls &> /dev/null
    alias hls="fs -ls"

    # If you have LZO compression enabled in your Hadoop cluster and
    # compress job outputs with LZOP (not covered in this tutorial):
    # Conveniently inspect an LZOP compressed file from the command
    # line; run via:
    #
    # $ lzohead /hdfs/path/to/lzop/compressed/file.lzo
    #
    # Requires installed 'lzop' command.
    #
    lzohead () {
        hadoop fs -cat $1 | lzop -dc | head -1000 | less
    }

    # Add Hadoop bin/ directory to PATH
    export PATH=$PATH:$HADOOP_HOME/bin

You can repeat this exercise also for other users who want to use Hadoop.

Excursus: Hadoop Distributed File System (HDFS)

Before we continue let us briefly learn a bit more about Hadoop's distributed file system.

The Hadoop Distributed File System (HDFS) is a distributed file system designed to run on commodity hardware. It has many similarities with existing distributed file systems. However, the differences from other distributed file systems are significant. HDFS is highly fault-tolerant and is designed to be deployed on low-cost hardware. HDFS provides high throughput access to application data and is suitable for applications that have large data sets. HDFS relaxes a few POSIX requirements to enable streaming access to file system data. HDFS was originally built as infrastructure for the Apache Nutch web search engine project. HDFS is part of the Apache Hadoop project, which is part of the Apache Lucene project.

The following picture gives an overview of the most important HDFS components.

Configuration

Our goal in this tutorial is a single-node setup of Hadoop. More information about what we do in this section is available on the Hadoop Wiki.

hadoop-env.sh

The only required environment variable we have to configure for Hadoop in this tutorial is JAVA_HOME. Open conf/hadoop-env.sh in the editor of your choice (if you used the installation path in this tutorial, the full path is /usr/local/hadoop/conf/hadoop-env.sh) and set the JAVA_HOME environment variable to the Sun JDK/JRE 6 directory.

Change

    # conf/hadoop-env.sh

    # The java implementation to use. Required.
    # export JAVA_HOME=/usr/lib/j2sdk1.5-sun

to

    # conf/hadoop-env.sh

    # The java implementation to use. Required.
    export JAVA_HOME=/usr/lib/jvm/java-6-sun

Note: If you are on a Mac with OS X 10.7 you can use the following line to set up JAVA_HOME in conf/hadoop-env.sh.

    # conf/hadoop-env.sh (on Mac systems)

    # for our Mac users
    export JAVA_HOME=`/usr/libexec/java_home`

conf/*-site.xml

In this section, we will configure the directory where Hadoop will store its data files, the network ports it listens to, etc. Our setup will use Hadoop's Distributed File System, HDFS, even though our little "cluster" only contains our single local machine.

You can leave the settings below "as is" with the exception of the hadoop.tmp.dir parameter – this parameter you must change to a directory of your choice. We will use the directory /app/hadoop/tmp in this tutorial. Hadoop's default configurations use hadoop.tmp.dir as the base temporary directory both for the local file system and HDFS, so don't be surprised if you see Hadoop creating the specified directory automatically on HDFS at some later point.

Now we create the directory and set the required ownerships and permissions:

    $ sudo mkdir -p /app/hadoop/tmp
    $ sudo chown hduser:hadoop /app/hadoop/tmp
    # ...and if you want to tighten up security, chmod from 755 to 750...
    $ sudo chmod 750 /app/hadoop/tmp

If you forget to set the required ownerships and permissions, you will see a java.io.IOException when you try to format the name node in the next section.
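To catch a permissions problem before formatting the name node, a small pre-flight check can help. The sketch below assumes it is run as the user that will run Hadoop (hduser in this tutorial); dir_is_usable is a hypothetical helper name.

```shell
# Hypothetical pre-flight check: succeeds only if $1 is an existing
# directory that the current user can read, write, and enter.
dir_is_usable() {
    [ -d "$1" ] && [ -r "$1" ] && [ -w "$1" ] && [ -x "$1" ]
}

# Example call, run as hduser:
#   dir_is_usable /app/hadoop/tmp || echo "fix ownership/permissions first"
```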

Add the following snippets between the <configuration> ... </configuration> tags in the respective configuration XML file.

In file conf/core-site.xml:

    <!-- conf/core-site.xml -->
    <property>
      <name>hadoop.tmp.dir</name>
      <value>/app/hadoop/tmp</value>
      <description>A base for other temporary directories.</description>
    </property>

    <property>
      <name>fs.default.name</name>
      <value>hdfs://localhost:54310</value>
      <description>The name of the default file system. A URI whose
      scheme and authority determine the FileSystem implementation. The
      uri's scheme determines the config property (fs.SCHEME.impl) naming
      the FileSystem implementation class. The uri's authority is used to
      determine the host, port, etc. for a filesystem.</description>
    </property>

In file conf/mapred-site.xml:

    <!-- conf/mapred-site.xml -->
    <property>
      <name>mapred.job.tracker</name>
      <value>localhost:54311</value>
      <description>The host and port that the MapReduce job tracker runs
      at. If "local", then jobs are run in-process as a single map
      and reduce task.
      </description>
    </property>

In file conf/hdfs-site.xml:

    <!-- conf/hdfs-site.xml -->
    <property>
      <name>dfs.replication</name>
      <value>1</value>
      <description>Default block replication.
      The actual number of replications can be specified when the file is created.
      The default is used if replication is not specified in create time.
      </description>
    </property>

See Getting Started with Hadoop and the documentation in Hadoop's API Overview if you have any questions about Hadoop's configuration options.
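To illustrate how the snippets sit inside the <configuration> tags, here is a sketch that writes a complete, minimal core-site.xml to a path of your choice. The function name write_core_site is hypothetical, the descriptions are omitted for brevity, and the function overwrites the target file, so try it on a scratch path first rather than on your real conf/core-site.xml.

```shell
# Hypothetical helper: write a complete, minimal core-site.xml to the
# path given as $1 (the file is overwritten). The two properties and
# their values are the ones used in this tutorial.
write_core_site() {
    cat > "$1" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/app/hadoop/tmp</value>
  </property>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:54310</value>
  </property>
</configuration>
EOF
}

# Example call:
#   write_core_site /tmp/core-site.xml && cat /tmp/core-site.xml
```

The other two files follow the same pattern, each with its single property between the tags.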

Formatting the HDFS filesystem via the NameNode

The first step to starting up your Hadoop installation is formatting the Hadoop filesystem, which is implemented on top of the local filesystem of your "cluster" (which includes only your local machine if you followed this tutorial). You need to do this the first time you set up a Hadoop cluster.

Do not format a running Hadoop filesystem as you will lose all the data currently in the cluster (in HDFS)!

To format the filesystem (which simply initializes the directory specified by the dfs.name.dir variable), run the command

    hduser@ubuntu:~$ /usr/local/hadoop/bin/hadoop namenode -format

The output will look like this:

    hduser@ubuntu:/usr/local/hadoop$ bin/hadoop namenode -format
    10/05/08 16:59:56 INFO namenode.NameNode: STARTUP_MSG:
    /************************************************************
    STARTUP_MSG: Starting NameNode
    STARTUP_MSG:   host = ubuntu/127.0.1.1
    STARTUP_MSG:   args = [-format]
    STARTUP_MSG:   version = 0.20.2
    STARTUP_MSG:   build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-0.20 -r 911707; compiled by 'chrisdo' on Fri Feb 19 08:07:34 UTC 2010
    ************************************************************/
    10/05/08 16:59:56 INFO namenode.FSNamesystem: fsOwner=hduser,hadoop
    10/05/08 16:59:56 INFO namenode.FSNamesystem: supergroup=supergroup
    10/05/08 16:59:56 INFO namenode.FSNamesystem: isPermissionEnabled=true
    10/05/08 16:59:56 INFO common.Storage: Image file of size 96 saved in 0 seconds.
    10/05/08 16:59:57 INFO common.Storage: Storage directory .../hadoop-hduser/dfs/name has been successfully formatted.
    10/05/08 16:59:57 INFO namenode.NameNode: SHUTDOWN_MSG:
    /************************************************************
    SHUTDOWN_MSG: Shutting down NameNode at ubuntu/127.0.1.1
    ************************************************************/
    hduser@ubuntu:/usr/local/hadoop$

Starting your single-node cluster

Run the command:

    hduser@ubuntu:~$ /usr/local/hadoop/bin/start-all.sh

This will start up a Namenode, Datanode, Jobtracker and a Tasktracker on your machine.

The output will look like this:

    hduser@ubuntu:/usr/local/hadoop$ bin/start-all.sh
    starting namenode, logging to /usr/local/hadoop/bin/../logs/hadoop-hduser-namenode-ubuntu.out
    localhost: starting datanode, logging to /usr/local/hadoop/bin/../logs/hadoop-hduser-datanode-ubuntu.out
    localhost: starting secondarynamenode, logging to /usr/local/hadoop/bin/../logs/hadoop-hduser-secondarynamenode-ubuntu.out
    starting jobtracker, logging to /usr/local/hadoop/bin/../logs/hadoop-hduser-jobtracker-ubuntu.out
    localhost: starting tasktracker, logging to /usr/local/hadoop/bin/../logs/hadoop-hduser-tasktracker-ubuntu.out
    hduser@ubuntu:/usr/local/hadoop$

A nifty tool for checking whether the expected Hadoop processes are running is jps (part of Sun's Java since v1.5.0). See also How to debug MapReduce programs.

    hduser@ubuntu:/usr/local/hadoop$ jps
    2287 TaskTracker
    2149 JobTracker
    1938 DataNode
    2085 SecondaryNameNode
    2349 Jps
    1788 NameNode

You can also check with netstat if Hadoop is listening on the configured ports.

    hduser@ubuntu:~$ sudo netstat -plten | grep java
    tcp   0  0 0.0.0.0:50070    0.0.0.0:*  LISTEN  1001  9236  2471/java
    tcp   0  0 0.0.0.0:50010    0.0.0.0:*  LISTEN  1001  9998  2628/java
    tcp   0  0 0.0.0.0:48159    0.0.0.0:*  LISTEN  1001  8496  2628/java
    tcp   0  0 0.0.0.0:53121    0.0.0.0:*  LISTEN  1001  9228  2857/java
    tcp   0  0 127.0.0.1:54310  0.0.0.0:*  LISTEN  1001  8143  2471/java
    tcp   0  0 127.0.0.1:54311  0.0.0.0:*  LISTEN  1001  9230  2857/java
    tcp   0  0 0.0.0.0:59305    0.0.0.0:*  LISTEN  1001  8141  2471/java
    tcp   0  0 0.0.0.0:50060    0.0.0.0:*  LISTEN  1001  9857  3005/java
    tcp   0  0 0.0.0.0:49900    0.0.0.0:*  LISTEN  1001  9037  2785/java
    tcp   0  0 0.0.0.0:50030    0.0.0.0:*  LISTEN  1001  9773  2857/java
    hduser@ubuntu:~$

If there are any errors, examine the log files in the /logs/ directory.
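The jps check can also be scripted. The sketch below reads jps output on standard input and prints every daemon from the list of five shown above that is not running; missing_daemons is a hypothetical helper name, and the daemon list is taken from the example output in this tutorial.

```shell
# Hypothetical helper: read `jps` output on stdin and print the name of
# every expected Hadoop daemon that is not listed. Prints nothing if
# all five daemons are present.
missing_daemons() {
    jps_output=$(cat)
    for daemon in NameNode SecondaryNameNode DataNode JobTracker TaskTracker; do
        # jps prints "<pid> <name>"; compare the whole second field so that
        # "NameNode" does not accidentally match "SecondaryNameNode".
        if ! printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "$daemon"
        fi
    done
}

# Example call:
#   jps | missing_daemons
```

Any name it prints points you to the corresponding log file to examine.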

Stopping your single-node cluster

Run the command

    hduser@ubuntu:~$ /usr/local/hadoop/bin/stop-all.sh

to stop all the daemons running on your machine.

Example output:

    hduser@ubuntu:/usr/local/hadoop$ bin/stop-all.sh
    stopping jobtracker
    localhost: stopping tasktracker
    stopping namenode
    localhost: stopping datanode
    localhost: stopping secondarynamenode
    hduser@ubuntu:/usr/local/hadoop$

Running a MapReduce job

We will now run your first Hadoop MapReduce job. We will use the WordCount example job which reads text files and counts how often words occur. The input is text files and the output is text files, each line of which contains a word and the count of how often it occurred, separated by a tab. More information about what happens behind the scenes is available at the Hadoop Wiki.

Download example input data

We will use three ebooks from Project Gutenberg for this example:

- The Outline of Science, Vol. 1 (of 4) by J. Arthur Thomson

- The Notebooks of Leonardo Da Vinci

- Ulysses by James Joyce

Download each ebook as text files in Plain Text UTF-8 encoding and store the files in a local temporary directory of choice, for example /tmp/gutenberg.

    hduser@ubuntu:~$ ls -l /tmp/gutenberg/
    total 3604
    -rw-r--r-- 1 hduser hadoop  674566 Feb  3 10:17 pg20417.txt
    -rw-r--r-- 1 hduser hadoop 1573112 Feb  3 10:18 pg4300.txt
    -rw-r--r-- 1 hduser hadoop 1423801 Feb  3 10:18 pg5000.txt
    hduser@ubuntu:~$

Restart the Hadoop cluster

Restart your Hadoop cluster if it's not running already.

    hduser@ubuntu:~$ /usr/local/hadoop/bin/start-all.sh

Copy local example data to HDFS

Before we run the actual MapReduce job, we first have to copy the files from our local file system to Hadoop's HDFS.

    hduser@ubuntu:/usr/local/hadoop$ bin/hadoop dfs -copyFromLocal /tmp/gutenberg /user/hduser/gutenberg
    hduser@ubuntu:/usr/local/hadoop$ bin/hadoop dfs -ls /user/hduser
    Found 1 items
    drwxr-xr-x   - hduser supergroup          0 2010-05-08 17:40 /user/hduser/gutenberg
    hduser@ubuntu:/usr/local/hadoop$ bin/hadoop dfs -ls /user/hduser/gutenberg
    Found 3 items
    -rw-r--r--   3 hduser supergroup     674566 2022-03-10 11:38 /user/hduser/gutenberg/pg20417.txt
    -rw-r--r--   3 hduser supergroup    1573112 2022-03-10 11:38 /user/hduser/gutenberg/pg4300.txt
    -rw-r--r--   3 hduser supergroup    1423801 2022-03-10 11:38 /user/hduser/gutenberg/pg5000.txt
    hduser@ubuntu:/usr/local/hadoop$

Run the MapReduce job

Now, we actually run the WordCount example job.

    hduser@ubuntu:/usr/local/hadoop$ bin/hadoop jar hadoop*examples*.jar wordcount /user/hduser/gutenberg /user/hduser/gutenberg-output

This command will read all the files in the HDFS directory /user/hduser/gutenberg, process them, and store the result in the HDFS directory /user/hduser/gutenberg-output.

Note: Some people run the command above and get the following error message:

    Exception in thread "main" java.io.IOException: Error opening job jar: hadoop*examples*.jar
        at org.apache.hadoop.util.RunJar.main(RunJar.java:90)
    Caused by: java.util.zip.ZipException: error in opening zip file

In this case, re-run the command with the full name of the Hadoop Examples JAR file, for example:

    hduser@ubuntu:/usr/local/hadoop$ bin/hadoop jar hadoop-examples-1.0.3.jar wordcount /user/hduser/gutenberg /user/hduser/gutenberg-output
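One plausible cause of this error (an assumption on my part, based on the error message quoting the unexpanded pattern) is that hadoop*examples*.jar does not match an existing file in the current directory, so the literal pattern is passed to Hadoop. The sketch below resolves the pattern to a real file name first; find_examples_jar is a hypothetical helper name.

```shell
# Hypothetical helper: print the path of the first file in directory $1
# that matches hadoop*examples*.jar, or return non-zero if there is none.
find_examples_jar() {
    for jar in "$1"/hadoop*examples*.jar; do
        # If nothing matches, sh/bash leave the pattern unexpanded,
        # so test that the candidate really exists as a file.
        if [ -f "$jar" ]; then
            echo "$jar"
            return 0
        fi
    done
    return 1
}

# Example call, from /usr/local/hadoop:
#   bin/hadoop jar "$(find_examples_jar .)" wordcount \
#       /user/hduser/gutenberg /user/hduser/gutenberg-output
```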

Example output of the WordCount job in the console:

    hduser@ubuntu:/usr/local/hadoop$ bin/hadoop jar hadoop*examples*.jar wordcount /user/hduser/gutenberg /user/hduser/gutenberg-output
    10/05/08 17:43:00 INFO input.FileInputFormat: Total input paths to process : 3
    10/05/08 17:43:01 INFO mapred.JobClient: Running job: job_201005081732_0001
    10/05/08 17:43:02 INFO mapred.JobClient:  map 0% reduce 0%
    10/05/08 17:43:14 INFO mapred.JobClient:  map 66% reduce 0%
    10/05/08 17:43:17 INFO mapred.JobClient:  map 100% reduce 0%
    10/05/08 17:43:26 INFO mapred.JobClient:  map 100% reduce 100%
    10/05/08 17:43:28 INFO mapred.JobClient: Job complete: job_201005081732_0001
    10/05/08 17:43:28 INFO mapred.JobClient: Counters: 17
    10/05/08 17:43:28 INFO mapred.JobClient:   Job Counters
    10/05/08 17:43:28 INFO mapred.JobClient:     Launched reduce tasks=1
    10/05/08 17:43:28 INFO mapred.JobClient:     Launched map tasks=3
    10/05/08 17:43:28 INFO mapred.JobClient:     Data-local map tasks=3
    10/05/08 17:43:28 INFO mapred.JobClient:   FileSystemCounters
    10/05/08 17:43:28 INFO mapred.JobClient:     FILE_BYTES_READ=2214026
    10/05/08 17:43:28 INFO mapred.JobClient:     HDFS_BYTES_READ=3639512
    10/05/08 17:43:28 INFO mapred.JobClient:     FILE_BYTES_WRITTEN=3687918
    10/05/08 17:43:28 INFO mapred.JobClient:     HDFS_BYTES_WRITTEN=880330
    10/05/08 17:43:28 INFO mapred.JobClient:   Map-Reduce Framework
    10/05/08 17:43:28 INFO mapred.JobClient:     Reduce input groups=82290
    10/05/08 17:43:28 INFO mapred.JobClient:     Combine output records=102286
    10/05/08 17:43:28 INFO mapred.JobClient:     Map input records=77934
    10/05/08 17:43:28 INFO mapred.JobClient:     Reduce shuffle bytes=1473796
    10/05/08 17:43:28 INFO mapred.JobClient:     Reduce output records=82290
    10/05/08 17:43:28 INFO mapred.JobClient:     Spilled Records=255874
    10/05/08 17:43:28 INFO mapred.JobClient:     Map output bytes=6076267
    10/05/08 17:43:28 INFO mapred.JobClient:     Combine input records=629187
    10/05/08 17:43:28 INFO mapred.JobClient:     Map output records=629187
    10/05/08 17:43:28 INFO mapred.JobClient:     Reduce input records=102286

Check if the result is successfully stored in HDFS directory /user/hduser/gutenberg-output:

    hduser@ubuntu:/usr/local/hadoop$ bin/hadoop dfs -ls /user/hduser
    Found 2 items
    drwxr-xr-x   - hduser supergroup          0 2010-05-08 17:40 /user/hduser/gutenberg
    drwxr-xr-x   - hduser supergroup          0 2010-05-08 17:43 /user/hduser/gutenberg-output
    hduser@ubuntu:/usr/local/hadoop$ bin/hadoop dfs -ls /user/hduser/gutenberg-output
    Found 2 items
    drwxr-xr-x   - hduser supergroup          0 2010-05-08 17:43 /user/hduser/gutenberg-output/_logs
    -rw-r--r--   1 hduser supergroup     880802 2010-05-08 17:43 /user/hduser/gutenberg-output/part-r-00000
    hduser@ubuntu:/usr/local/hadoop$

If you want to modify some Hadoop settings on the fly like increasing the number of Reduce tasks, you can use the "-D" option:

    hduser@ubuntu:/usr/local/hadoop$ bin/hadoop jar hadoop*examples*.jar wordcount -D mapred.reduce.tasks=16 /user/hduser/gutenberg /user/hduser/gutenberg-output

An important note about mapred.map.tasks: Hadoop does not honor mapred.map.tasks beyond considering it a hint. But it accepts the user specified mapred.reduce.tasks and doesn't manipulate that. You cannot force mapred.map.tasks but you can specify mapred.reduce.tasks.

Retrieve the job result from HDFS

To inspect the file, you can copy it from HDFS to the local file system. Alternatively, you can use the command

    hduser@ubuntu:/usr/local/hadoop$ bin/hadoop dfs -cat /user/hduser/gutenberg-output/part-r-00000

to read the file directly from HDFS without copying it to the local file system. In this tutorial, we will copy the results to the local file system though.

    hduser@ubuntu:/usr/local/hadoop$ mkdir /tmp/gutenberg-output
    hduser@ubuntu:/usr/local/hadoop$ bin/hadoop dfs -getmerge /user/hduser/gutenberg-output /tmp/gutenberg-output
    hduser@ubuntu:/usr/local/hadoop$ head /tmp/gutenberg-output/gutenberg-output
    "(Lo)cra"       1
    "1490   1
    "1498," 1
    "35"    1
    "40,"   1
    "A      2
    "AS-IS".        1
    "A_     1
    "Absoluti       1
    "Alack! 1
    hduser@ubuntu:/usr/local/hadoop$

Note that in this specific output the quote signs (") enclosing the words in the head output above have not been inserted by Hadoop. They are the result of the word tokenizer used in the WordCount example, and in this case they matched the beginning of a quote in the ebook texts. Just inspect the part-r-00000 file further to see it for yourself.

The command fs -getmerge will simply concatenate any files it finds in the directory you specify. This means that the merged file might (and most likely will) not be sorted.
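If you want the merged result ordered by frequency, you can sort it locally. The following sketch relies on the output format described earlier (a word and its count separated by a tab) and uses the standard sort and head utilities; top_words is a hypothetical helper name.

```shell
# Hypothetical helper: print the $2 most frequent words from a WordCount
# result file $1 whose lines have the form "<word><TAB><count>".
top_words() {
    tab=$(printf '\t')
    # Sort by the second field, numerically and descending; break ties
    # by the word itself so the order is stable across runs.
    sort -t "$tab" -k2,2nr -k1,1 "$1" | head -n "$2"
}

# Example call:
#   top_words /tmp/gutenberg-output/gutenberg-output 10
```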

Hadoop Web Interfaces

Hadoop comes with several web interfaces which are by default (see conf/hadoop-default.xml) available at these locations:

- http://localhost:50070/ – web UI of the NameNode daemon

- http://localhost:50030/ – web UI of the JobTracker daemon

- http://localhost:50060/ – web UI of the TaskTracker daemon

These web interfaces provide concise information about what's happening in your Hadoop cluster. You might want to give them a try.

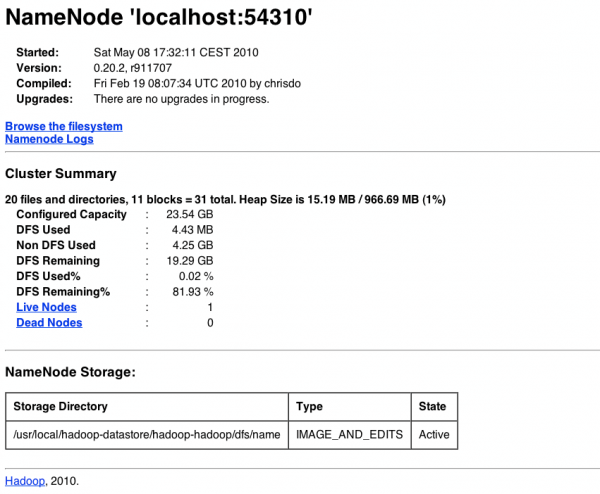

NameNode Web Interface (HDFS layer)

The name node web UI shows you a cluster summary including information about total/remaining capacity, live and dead nodes. Additionally, it allows you to browse the HDFS namespace and view the contents of its files in the web browser. It also gives access to the local machine's Hadoop log files.

By default, it's available at http://localhost:50070/.

JobTracker Web Interface (MapReduce layer)

The JobTracker web UI provides information about general job statistics of the Hadoop cluster, running/completed/failed jobs and a job history log file. It also gives access to the local machine's Hadoop log files (the machine on which the web UI is running).

By default, it's available at http://localhost:50030/.


TaskTracker Web Interface (MapReduce layer)

The task tracker web UI shows you running and non-running tasks. It also gives access to the local machine's Hadoop log files.

By default, it's available at http://localhost:50060/.


What's next?

If you're feeling comfortable, you can continue your Hadoop experience with my follow-up tutorial Running Hadoop On Ubuntu Linux (Multi-Node Cluster) where I describe how to build a Hadoop multi-node cluster with two Ubuntu boxes (this will increase your current cluster size by 100%, heh).

In addition, I wrote a tutorial on how to code a simple MapReduce job in the Python programming language which can serve as the basis for writing your own MapReduce programs.

From yours truly:

- Running Hadoop On Ubuntu Linux (Multi-Node Cluster)

- Writing An Hadoop MapReduce Program In Python

From other people:

- How to debug MapReduce programs

- Hadoop API Overview (for Hadoop 2.x)

Change Log

Only important changes to this article are listed here:

- 2011-07-17: Renamed the Hadoop user from hadoop to hduser based on readers' feedback. This should make the distinction between the local Hadoop user (now hduser), the local Hadoop group (hadoop), and the Hadoop CLI tool (hadoop) more clear.

Source: https://www.michael-noll.com/tutorials/running-hadoop-on-ubuntu-linux-single-node-cluster/

Posted by: mitchellthemot.blogspot.com
